Show Focus

Designing the Future of Filmmaking

Wolf in Motion created a system that uses augmented and mixed reality to control cinema cameras

THE CHALLENGE

Simplify camera operation with a solution designed to extend filmmakers' craft rather than automate it

THE OUTCOME

A hardware and software design that improves the filming experience by letting the crew see and control the camera's focus in 3D while the film is being shot.

AWARDS

iF Design Award 2022

D&AD shortlist 2022

Show Focus was initiated to address a problem faced across the filmmaking industry, from Focus Pullers and Directors of Photography on high-end shoots to vloggers and small camera crews: the time and financial cost of reshoots caused by out-of-focus footage. In documentaries, out-of-focus footage can also mean lost content.

Part of the film crew’s camera department, the Focus Puller is responsible for keeping the lens’s optical focus on the subject or action being filmed. While most aspects of the image can be adjusted or corrected in post-production, bad focus cannot. This is why Focus Pulling is often considered the most difficult job on set.

Focus Pulling requires years of training, as well as preparation for each shot. Such preparation may include physically marking the scene, often with coloured tape on the ground and corresponding marks on the lens. However, these marks cannot always be used, as Focus Pullers need to keep their eyes on the action to anticipate the actors' movements. As a consequence, they often have to guess where the focus is in space and trust the kinesthetic memory of their fingers to find the right angle at the right pace.

At the same time, brighter modern lenses (with wider maximum apertures) and larger camera sensors produce a shallower depth of field. Worse, ever-increasing image resolution makes the smallest focusing mistakes more noticeable: what could have been considered sharp enough in HD is barely acceptable in 4K and certainly unusable in 8K. To counterbalance this side effect of technological progress, which affects filmmakers and photographers alike, camera manufacturers keep investing in better autofocus. Today's systems incorporate advanced technologies such as eye tracking and machine learning to keep shots in focus even with the most demanding equipment. However, while autofocus can be great for spontaneity in some documentaries, it is much less appealing for fiction, where it lacks the stylistic control and human touch of manual focusing.
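To make the depth-of-field argument concrete, here is a minimal sketch using the standard thin-lens hyperfocal formulas. It is illustrative only: the 50 mm lens, the 3 m subject distance and the circle-of-confusion (CoC) values are assumptions, and a smaller CoC stands in for a higher-resolution capture that tolerates less blur. It shows two of the effects described: opening the aperture (a brighter lens, lower f-number) and tightening the CoC both shrink the zone of acceptable focus.

```python
import math


def depth_of_field(focal_mm, f_number, subject_mm, coc_mm):
    """Near and far limits (mm) of acceptable focus for a thin lens.

    coc_mm is the largest blur circle still judged sharp; a higher delivery
    resolution effectively demands a smaller value.
    """
    # Hyperfocal distance: focusing here pushes the far limit to infinity.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


def zone_of_focus(focal_mm, f_number, subject_mm, coc_mm):
    """Total depth of field (mm) between the near and far limits."""
    near, far = depth_of_field(focal_mm, f_number, subject_mm, coc_mm)
    return far - near


# Assumed example: 50 mm lens focused on a subject 3 m away.
for f_number, coc in [(2.0, 0.03), (5.6, 0.03), (2.0, 0.015)]:
    dof = zone_of_focus(50, f_number, 3000, coc)
    print(f"f/{f_number}, CoC {coc} mm: {dof / 1000:.2f} m in focus")
```

With these assumed values, the zone of acceptable focus is roughly 0.43 m at f/2 and about 1.24 m at f/5.6; halving the CoC to 0.015 mm at f/2 narrows it to about 0.21 m. The larger-sensor effect is not modelled here, since it depends on how focal length and CoC are rescaled to keep the same framing.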

As designers, we believe in technology that extends human craft rather than replacing it.

That is why we set out to augment this natural human ability to guess and turn it into a new sense: one that lets the Focus Puller know exactly where the focus plane is at any time, while keeping their eyes on the action.

Process

From our interviews with Focus Pullers, vloggers and DoPs, we learned that, on the whole, people who work with cameras are technophiles. They invest in new equipment, including niche products from new channels such as Kickstarter. Some use unpolished, prototype or beta products, and they often adapt or hack their standard equipment. They are results-oriented and primarily concerned with functionality, but prefer options that are straightforward to use.

We hypothesised that out-of-focus shots were due to the Focus Puller's lack of visual feedback, and built several prototypes to address that. Inspired by our experience with 3D animation, we became interested in the possibilities offered by time-of-flight sensors and light detection and ranging (LiDAR) systems.

In 2013, we created the first ever focusing mechanism using a spatial point-cloud visualisation with a 3D representation of the focus plane. We achieved a focusing precision of around 1 cm over a 1 to 10 m range, but the technology of the time was too delicate to deploy on a real set.

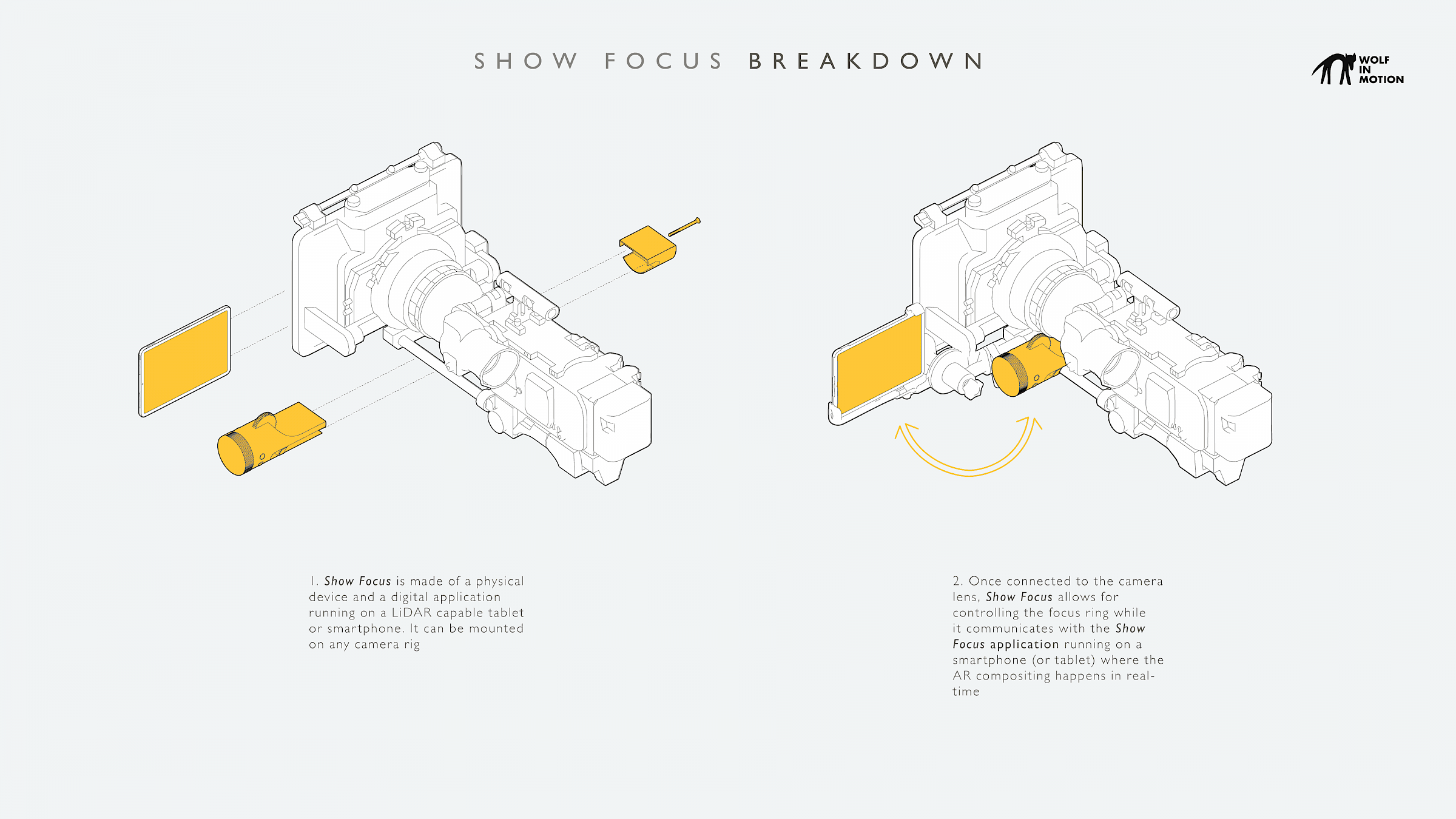

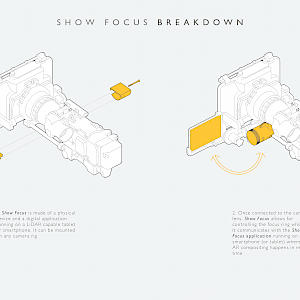

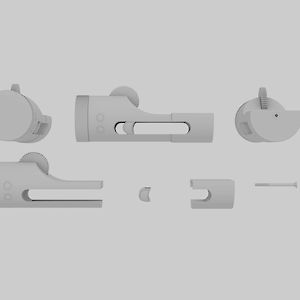

In 2020, we saw the introduction of LiDAR on the Apple iPad Pro as an opportunity to revisit our earlier work and improve our solution. We designed a simpler camera mount to act as a bridge between the focus ring and the Show Focus software, now an iOS application.

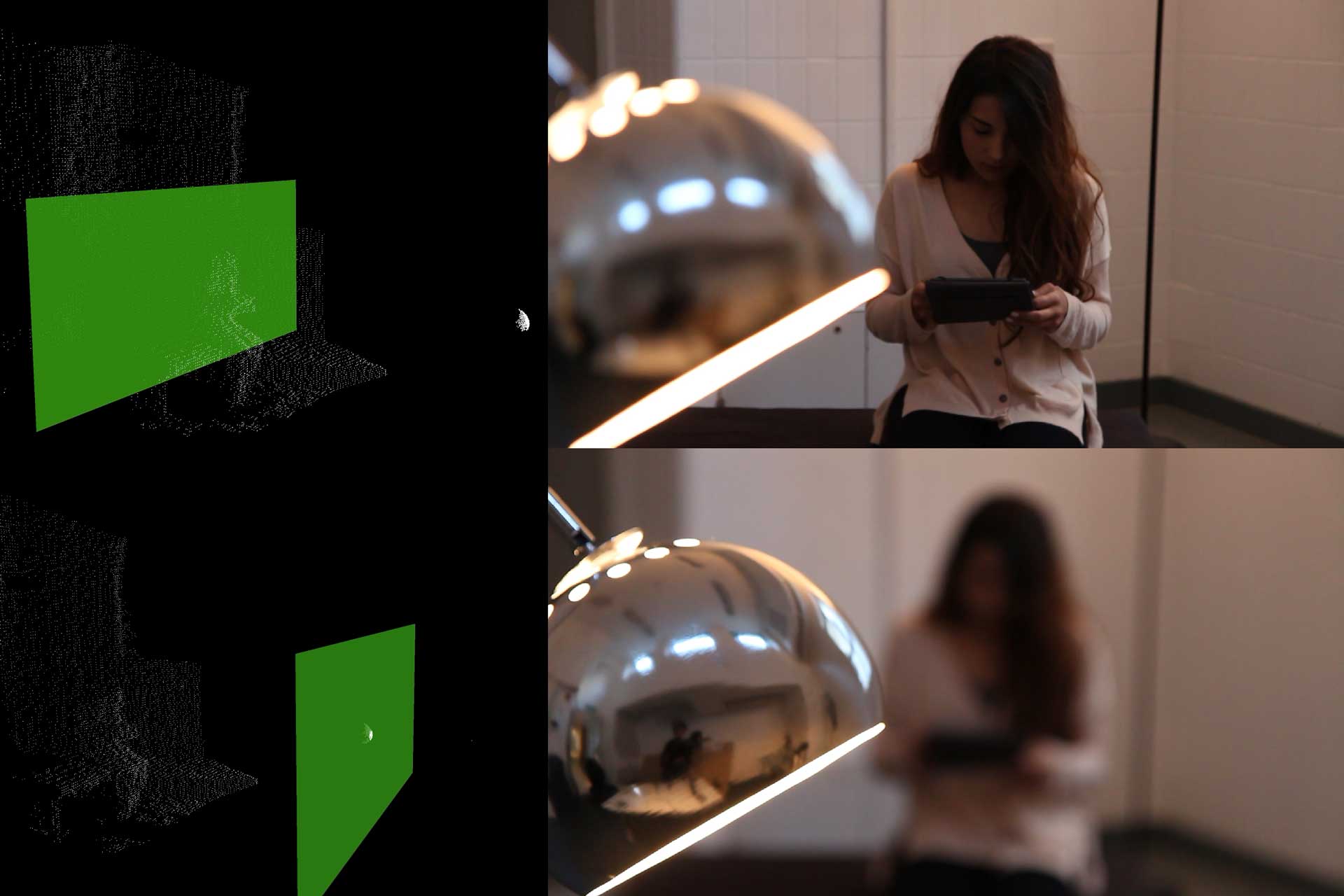

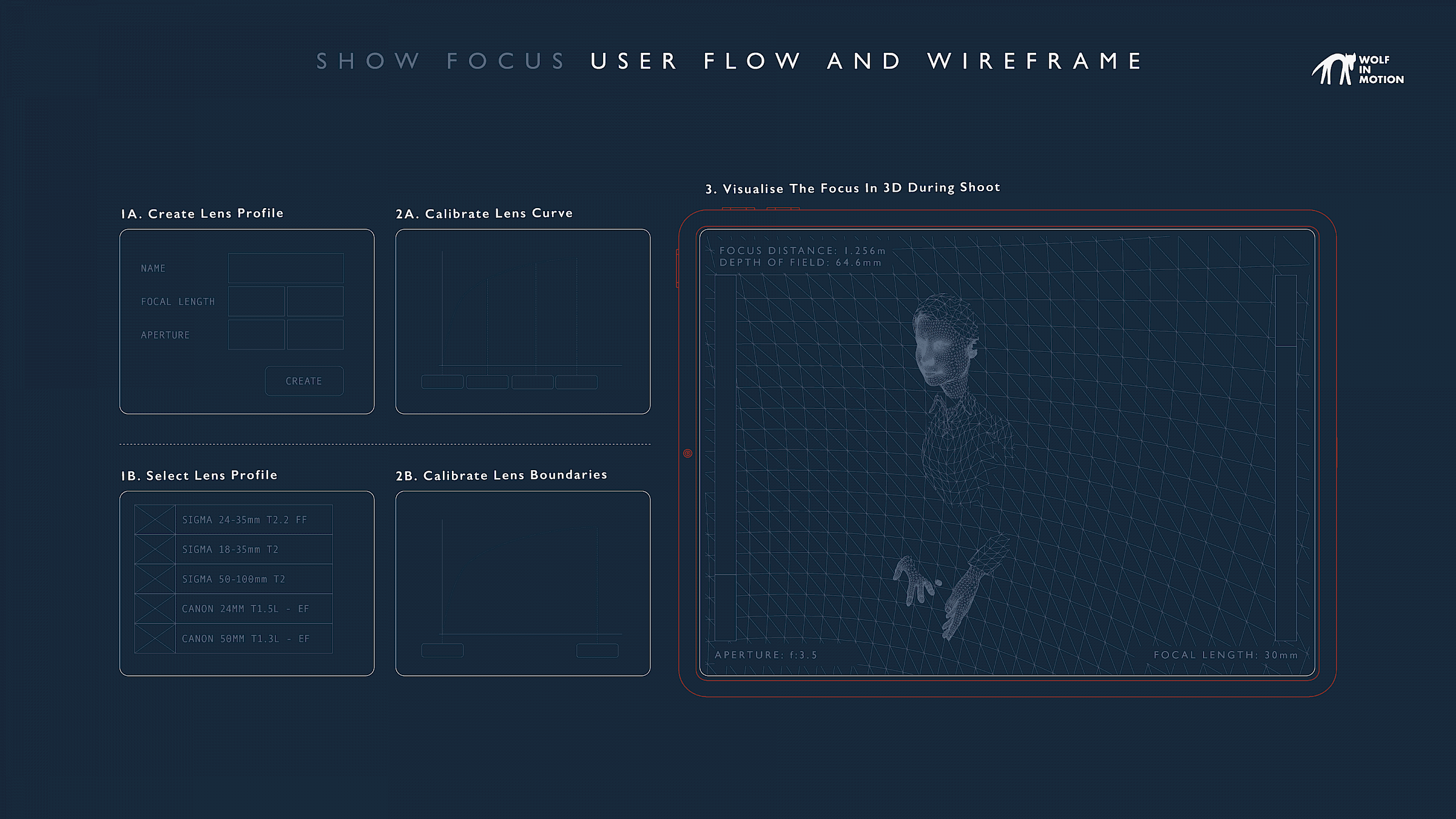

For the first prototypes, we modified a manual ‘follow focus’ mechanism so that it could operate the focus ring while encoding the ring's rotation. These rotation readings are sent via Bluetooth Low Energy (BLE) to our app, where, following calibration, they are converted into the spatial position of the focus plane. The app's LiDAR mesh of the scene then allows the focus plane and depth of field to be composited in augmented reality. Depending on where the iPad is mounted, the composite view is displayed from a point of view close to the camera operator's own. In this view, a plane shows the focus of the main camera: everything that intersects this plane is in focus along the intersection.
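The encoding-and-calibration step could look something like the following minimal sketch. Everything specific in it is an assumption rather than the Show Focus implementation: the encoder resolution (`COUNTS_PER_REV`), the calibration format (ring angles paired with focus distances measured on set), and the choice to interpolate in inverse distance, which tends to follow a lens's focus scale more closely than interpolating metres directly. It also assumes an untilted lens, so the focus plane is perpendicular to the optical axis.

```python
from bisect import bisect_right

# Hypothetical encoder resolution; the real hardware value is unknown.
COUNTS_PER_REV = 4096


def counts_to_angle(counts):
    """Convert a raw encoder count (as received over BLE) into ring degrees."""
    return counts * 360.0 / COUNTS_PER_REV


class FocusCalibration:
    """Maps focus-ring angle to focus distance from calibration marks.

    marks: (ring_angle_deg, distance_m) pairs with distinct angles, e.g.
    recorded by pulling focus onto targets at known distances (hypothetical).
    """

    def __init__(self, marks):
        self.marks = sorted(marks)
        self.angles = [angle for angle, _ in self.marks]

    def distance_for_angle(self, angle_deg):
        # Clamp to the calibrated range instead of extrapolating.
        if angle_deg <= self.angles[0]:
            return self.marks[0][1]
        if angle_deg >= self.angles[-1]:
            return self.marks[-1][1]
        i = bisect_right(self.angles, angle_deg)
        (a0, d0), (a1, d1) = self.marks[i - 1], self.marks[i]
        t = (angle_deg - a0) / (a1 - a0)
        # Interpolate in inverse distance (dioptres), then convert back.
        return 1.0 / ((1 - t) / d0 + t / d1)


def focus_plane(camera_pos, optical_axis, distance_m):
    """Return the focus plane as (point, unit normal).

    camera_pos is assumed to be the cinema camera's sensor position in the
    AR scene; the plane sits distance_m along the optical axis.
    """
    length = sum(c * c for c in optical_axis) ** 0.5
    normal = tuple(c / length for c in optical_axis)
    point = tuple(p + distance_m * n for p, n in zip(camera_pos, normal))
    return point, normal


calibration = FocusCalibration([(0, 0.5), (90, 1.0), (180, 2.0), (270, 10.0)])
distance = calibration.distance_for_angle(counts_to_angle(1536))
plane_point, plane_normal = focus_plane((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), distance)
print(f"focus at {distance:.2f} m, plane through {plane_point}")
```

With these made-up marks placed 90° apart, a reading of 1536 counts (135°) maps to about 1.33 m, and `focus_plane` places the plane that far along the camera's forward vector, ready to be intersected with the LiDAR mesh.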

Our most recent development is a motorised system that allows two-way interaction. As with the earlier versions, the Focus Puller can manually rotate the focus ring and see the focus plane move accordingly. Alternatively, they can touch-drag the focus plane representation directly on the iPad screen, which rotates the focus ring and automatically moves the real focus plane.
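Driving the ring from the touchscreen requires the inverse of the ring-to-distance mapping. The minimal sketch below is hypothetical: it inverts an assumed calibration table of ring angles and focus distances (interpolating in inverse distance), converts the angular error into motor steps using an assumed drive ratio (`STEPS_PER_DEGREE`), and caps the steps per control tick so the ring eases towards the dragged position instead of jumping.

```python
from bisect import bisect_right

# Hypothetical drive ratio between the motor and the focus ring.
STEPS_PER_DEGREE = 20.0


def angle_for_distance(marks, distance_m):
    """Invert a (ring_angle_deg, distance_m) calibration table.

    Assumes focus distance increases with ring angle, and interpolates in
    inverse distance (dioptres) between neighbouring marks.
    """
    marks = sorted(marks)
    # Inverse distance falls as the angle grows; negate it so the keys
    # are ascending, as bisect requires.
    keys = [-1.0 / d for _, d in marks]
    key = -1.0 / distance_m
    if key <= keys[0]:
        return marks[0][0]
    if key >= keys[-1]:
        return marks[-1][0]
    i = bisect_right(keys, key)
    (a0, d0), (a1, d1) = marks[i - 1], marks[i]
    t = (1.0 / distance_m - 1.0 / d0) / (1.0 / d1 - 1.0 / d0)
    return a0 + t * (a1 - a0)


def motor_steps(current_deg, target_deg, max_steps_per_tick=200):
    """Signed motor steps for one control tick, capped to bound ring speed."""
    steps = round((target_deg - current_deg) * STEPS_PER_DEGREE)
    return max(-max_steps_per_tick, min(max_steps_per_tick, steps))


marks = [(0, 0.5), (90, 1.0), (180, 2.0), (270, 10.0)]
# The operator drags the on-screen plane from 1 m to 1.5 m.
target = angle_for_distance(marks, 1.5)
print(target, motor_steps(90.0, target))
```

Capping the steps per tick is one simple way to keep a pull smooth while the finger is still moving; a production controller might also shape acceleration.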

Combined with hand tracking and mixed-reality glasses, this opens the door to numerous new interaction possibilities that will redefine the way cinema and photography cameras are operated.

- The focus plane, for instance, can now be moved directly in the scene with one hand

- Reducing or increasing the depth of field can be done by dragging the front and back planes of acceptable focus

- Adjusting the field of view, or the lens’s angle of view, becomes as intuitive as resizing a rectangular frame
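The geometry behind these three gestures can be sketched with textbook optics. This is a minimal illustration; the function names and vector conventions are hypothetical. `classify_depth` labels a LiDAR mesh vertex as in front of, inside or behind the zone bounded by the front and back planes of acceptable focus; `aperture_for_planes` solves for the focus distance and f-number that would place those planes where the operator dragged them, using the common approximation that neglects the focal-length term in the hyperfocal distance; and the last two functions convert between focal length and horizontal angle of view, which is what resizing the frame would drive, assuming a zoom lens motorised like the focus ring.

```python
import math


def classify_depth(vertex, camera_pos, axis_unit, near_m, far_m):
    """Label a scene-mesh vertex relative to the front and back planes of
    acceptable focus, using its depth along the (unit) optical axis."""
    depth = sum((v - c) * a for v, c, a in zip(vertex, camera_pos, axis_unit))
    if depth < near_m:
        return "front"
    if depth > far_m:
        return "behind"
    return "in_focus"


def aperture_for_planes(near_m, far_m, focal_mm, coc_mm):
    """Focus distance (m) and f-number that put the near and far limits of
    acceptable focus at near_m and far_m.

    Uses the approximations 1/near = 1/s + 1/H, 1/far = 1/s - 1/H and
    H = f^2 / (N * c), which neglect the focal-length terms.
    """
    near_mm, far_mm = near_m * 1000.0, far_m * 1000.0
    inv_hyperfocal = (1.0 / near_mm - 1.0 / far_mm) / 2.0
    subject_mm = 2.0 / (1.0 / near_mm + 1.0 / far_mm)
    f_number = focal_mm ** 2 * inv_hyperfocal / coc_mm
    return subject_mm / 1000.0, f_number


def horizontal_fov_deg(focal_mm, sensor_width_mm):
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def focal_for_fov(fov_deg, sensor_width_mm):
    """Focal length giving a desired horizontal angle of view, e.g. when the
    operator resizes the frame rectangle (assumes a motorised zoom)."""
    return sensor_width_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
```

In this approximation the focus distance is the harmonic mean of the two limits, so dragging only the back plane moves the focus distance as well as the aperture.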

If we consider film production at large, and the growing role of CGI and compositing, other aspects of filmmaking also require a level of abstraction that is prone to composition errors. Scene blocking for later 3D compositing is one of them. In essence, the actors and the crew have to imagine the virtual elements of the final scene without actually seeing them (at best, these appear on a previsualisation monitor). As an integrated two-way communication system bridging what the camera and the crew can see and do, Show Focus allows compositing to be managed while the scene is being shot.

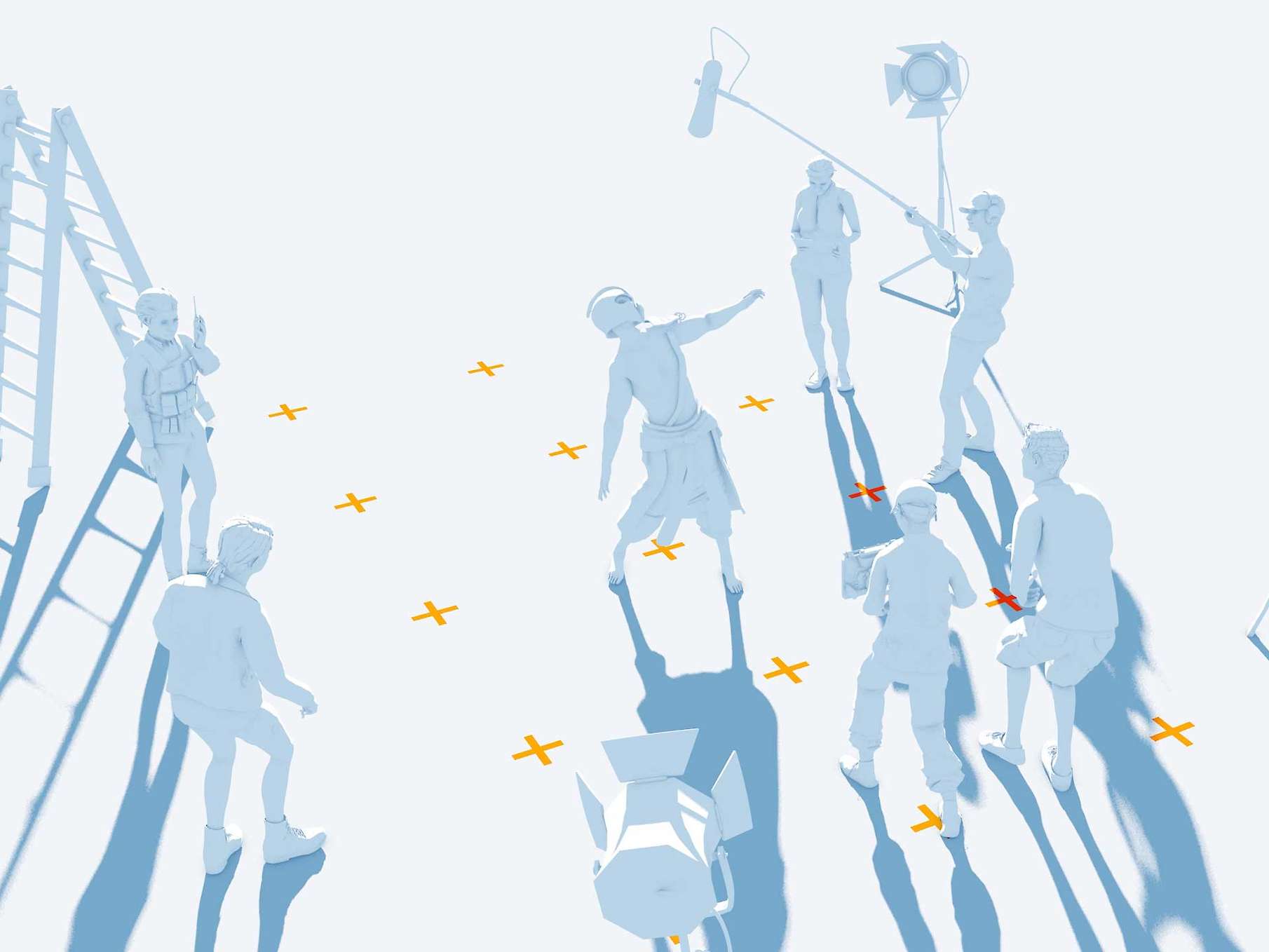

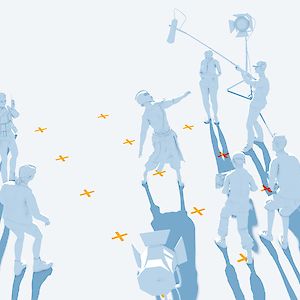

Image above: Film set where 3D compositing and VFX are synchronised with the cinema camera using Show Focus

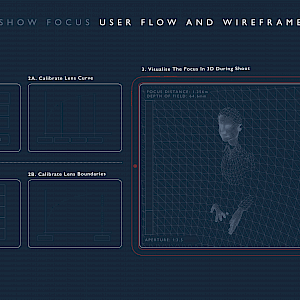

Image above: User flow and wireframe of the Show Focus iOS application

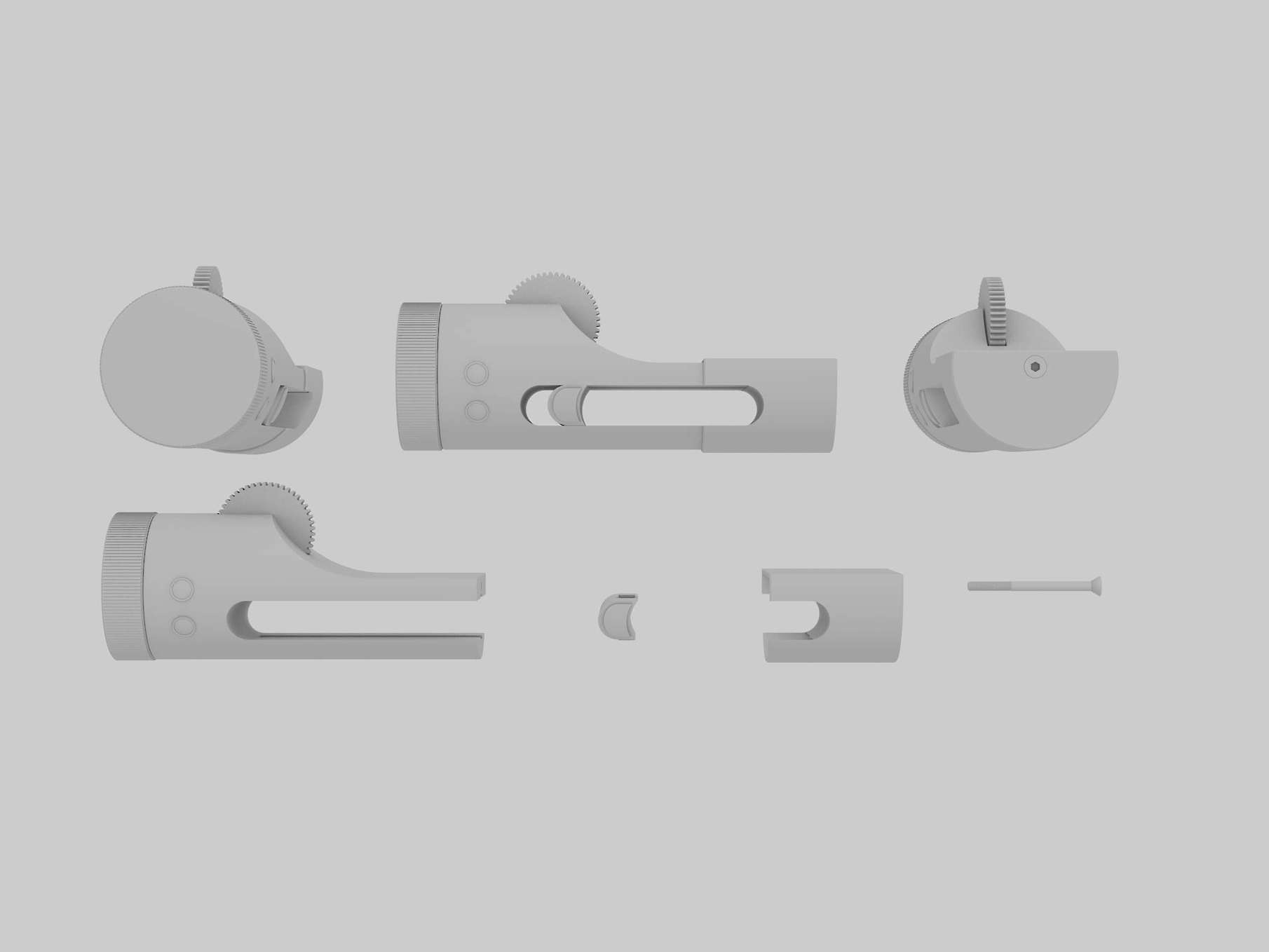

Image above: Rendering of the Show Focus hardware design

Contributors

Samuel Iliffe, Kevin Cobb, Giulio Amendola, Kate Thackara, Esmeralda Atzamalidou and Guillaume Couche
